I will occasionally get together for a weekend of gambling with my family. It’s nothing very intense, and we all have a good time losing and winning quarters from each other. One of the games, however, is completely mystifying in its ability to take money from me, and that game is Acey Deucey. To help de-mystify the game, and maybe stop handing over so many quarters to my uncles, I set out to understand how the game works.

The game

Acey Deucey (also known reverently as El Diablo among my family) is a pretty straightforward game. It’s played as follows:

One player is the dealer, and stays so the entire round. They are able to deal to themself and play.

Each player antes one dollar into a central pot.

The dealer shows two cards to the player to their left. The player has two options:

They can bet (up to the amount of the pot) that the next card will be numerically between the two cards they were dealt.

They can pass.

For the sake of determining “between,” an Ace is high if it’s the second card dealt or the deciding card. If the first card is an Ace, the player gets to choose whether it’s high or low before seeing the second card dealt.

If the person bets, there are three possible outcomes:

The third card dealt is in between the first two. They take from the pot whatever they bet.

The third card dealt is outside the first two. They put into the pot whatever they bet.

The third card dealt is exactly one of the first two. This is called getting stung, and the player puts into the pot double what they bet.

If the player passes, play continues on to the next player. This continues until the pot is empty.
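To make the three outcomes concrete, here is a minimal sketch of how a single bet settles. The helper name `settle_bet` and its arguments are my own illustration, not part of the analysis code later in the post; cards are ranks 2 through 14, with 14 standing for a high Ace and 1 for a first-card Ace played low.

```r
# Hypothetical helper: net change in the bettor's money for one bet.
# Ranks run 2..14 (14 = high Ace); a first-card Ace played low is 1.
settle_bet <- function(card1, card2, card3, bet) {
  low  <- min(card1, card2)
  high <- max(card1, card2)
  if (card3 == low || card3 == high) {
    return(-2 * bet)   # stung: pay double the bet into the pot
  }
  if (card3 > low && card3 < high) {
    return(bet)        # in between: take the bet from the pot
  }
  -bet                 # outside: pay the bet into the pot
}

settle_bet(4, 10, 7, bet = 1)   # in between
settle_bet(4, 10, 12, bet = 1)  # outside
settle_bet(4, 10, 10, bet = 1)  # stung
```

The sting is checked first so that a pair on the table (where nothing can land in between) still settles correctly.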

Expected value and probabilities with limited knowledge

So, the natural question arises: what’s a good bet? Or, what spread between two cards is needed to get a positive expected value? This is pretty easy to figure out, especially if you’ve been counting cards. But things get a little tricky if you’re not so sure of the cards left in the deck (which, at least for me, is always the case). I figure I can keep track of Aces and Twos, but everything else is too tough. So, I set out to calculate the expected value of various hands, given only the two cards in front of you and the number of Aces and Twos left in the deck.

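Calculating the expected value per dollar is straightforward. A win pays the bet, a loss costs the bet, and a sting costs double:

$$EV = P(\text{inside}) - P(\text{outside}) - 2\,P(\text{stung})$$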
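For the easy case where you have counted every card, the expected value follows directly from the counts left in the deck. The sketch below is only an illustration of that case: the helper `exact_ev` and the `remaining` vector are hypothetical names, and the example assumes a fresh 52-card deck with nothing removed except the two cards on the table.

```r
# Hypothetical helper: exact EV per dollar when every remaining card is known.
# `remaining` holds the counts left in the deck for ranks 2..14 (14 = high Ace).
exact_ev <- function(low, high, remaining) {
  ranks <- 2:14
  p <- remaining / sum(remaining)
  p_inside  <- sum(p[ranks > low & ranks < high])
  p_stung   <- sum(p[ranks == low | ranks == high])
  p_outside <- 1 - p_inside - p_stung
  p_inside - p_outside - 2 * p_stung
}

# Assumption: fresh deck, with only a 2 and a 10 dealt to the table.
remaining <- rep(4, 13)
remaining[c(2, 10) - 1] <- 3   # one 2 and one 10 are face up
exact_ev(2, 10, remaining)     # break-even: 0, up to floating-point error
```

With 28 cards inside, 16 outside, and 6 that sting, the 2-10 hand comes out at (28 - 16 - 12) / 50 = 0 under these assumptions.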

The trick lies in calculating the probabilities. This is not as straightforward as it might seem, because of our limited knowledge of only aces, twos, and the cards on the table. We do know a few things:

The sum of all probabilities must add to 1.

Each card that’s not a two, an ace, or one of the cards on the table must have the same probability.

Each card on the table has only 75% of the probability of a regular card, because we know that one of its 4 copies is gone.

We know the probability of drawing an Ace or a Two, because we know the number of cards in the deck and the number of Aces and Twos remaining.

We have a few different scenarios to consider, depending on whether either of the first two cards is an Ace or Two. I’ll write out the math for the case where neither of the face-up cards is an Ace or Two, and then show the code for the rest.

Putting these facts together, we can write

$$0.75\,p_0 + 0.75\,p_0 + 9\,p_0 + \frac{N_A}{N_T} + \frac{N_2}{N_T} = 1$$

where $p_0$ is a constant. The first two terms are the probabilities of drawing either of the cards on the table. The third term is the probability of drawing one of the nine cards not on the table and not an Ace or Two. $N_A$, $N_2$, and $N_T$ indicate the Aces, Twos, and Total cards remaining in the deck. Solving for $p_0$, we find that

$$p_0 = \frac{1 - \frac{N_A + N_2}{N_T}}{10.5}$$
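As a quick numeric check of this case (neither face-up card is an Ace or Two), the sketch below plugs in the counts from the `define_deck_odds(4, 10, 2, 2, 40)` example used later in the post and confirms that the pieces sum to 1. The variable names are just for illustration.

```r
# Counts taken from the define_deck_odds(4, 10, 2, 2, 40) example in this post
aces_left  <- 2
twos_left  <- 2
cards_left <- 40

# Probability left over for ranks 3..13, shared by 9 full-weight ranks
# and the 2 table ranks at 0.75 weight each: 9 + 2 * 0.75 = 10.5
p_0 <- (1 - (aces_left + twos_left) / cards_left) / (9 + 2 * 0.75)

probs <- c(two         = twos_left / cards_left,
           table_cards = 2 * 0.75 * p_0,
           other_ranks = 9 * p_0,
           ace         = aces_left / cards_left)

print(round(p_0, 8))  # 0.08571429
print(sum(probs))     # 1
```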

Again, this calculation changes slightly depending on whether one of the first two cards is an Ace or Two. This is implemented in the function define_deck_odds(), whose inputs are the information about the cards, and whose output is a data.table with the probability of drawing each card in the deck.

```r
library(data.table)

# Probability of drawing each rank (2 through 14, where 14 is a high Ace),
# given the two face-up cards and the aces, twos, and total cards left.
# card1 == 1 means the first card is an Ace that the player chose to play low.
define_deck_odds <- function(card1, card2, aces_left, twos_left, cards_left) {
  # neither card is an ace or two
  if (!(card1 %in% c(2, 14)) && !(card2 %in% c(2, 14)) && card1 != 1) {
    p_0 <- (1 - ((twos_left + aces_left) / cards_left)) / 10.5
    deck <- data.table(card = seq(2, 14),
                       prob = c(twos_left / cards_left,
                                rep(p_0, 11),
                                aces_left / cards_left))
    # weird edge case for completeness - if two cards are equal, then assign half
    if (card1 == card2) {
      deck[card1 - 1, prob := prob * .5]
    } else {
      deck[card1 - 1, prob := prob * .75]
      deck[card2 - 1, prob := prob * .75]
    }
  # first card is an ace or two
  } else if (card1 %in% c(2, 14) && !(card2 %in% c(2, 14))) {
    p_0 <- (1 - ((twos_left + aces_left) / cards_left)) / 10.75
    deck <- data.table(card = seq(2, 14),
                       prob = c(twos_left / cards_left,
                                rep(p_0, 11),
                                aces_left / cards_left))
    deck[card2 - 1, prob := prob * .75]
  # second card is an ace or two
  } else if (!(card1 %in% c(2, 14)) && card2 %in% c(2, 14) && card1 != 1) {
    p_0 <- (1 - ((twos_left + aces_left) / cards_left)) / 10.75
    deck <- data.table(card = seq(2, 14),
                       prob = c(twos_left / cards_left,
                                rep(p_0, 11),
                                aces_left / cards_left))
    deck[card1 - 1, prob := prob * .75]
  # both cards are aces/2s
  } else if (card1 %in% c(2, 14) && card2 %in% c(2, 14)) {
    p_0 <- (1 - ((twos_left + aces_left) / cards_left)) / 11
    deck <- data.table(card = seq(2, 14),
                       prob = c(twos_left / cards_left,
                                rep(p_0, 11),
                                aces_left / cards_left))
  # if 1st card is an ace, it's low (card2 keeps full weight in this branch)
  } else if (card1 == 1 && !(card2 %in% c(2, 14))) {
    p_0 <- (1 - ((twos_left + aces_left) / cards_left)) / 11
    deck <- data.table(card = seq(2, 14),
                       prob = c(twos_left / cards_left,
                                rep(p_0, 11),
                                aces_left / cards_left))
  # 1st card is a low ace, and second card is an ace or two
  } else if (card1 == 1 && card2 %in% c(2, 14)) {
    p_0 <- (1 - ((twos_left + aces_left) / cards_left)) / 11
    deck <- data.table(card = seq(2, 14),
                       prob = c(twos_left / cards_left,
                                rep(p_0, 11),
                                aces_left / cards_left))
  }

  # sanity check: the probabilities should sum to 1
  if (round(deck[, sum(prob)], 2) != 1) {
    warning("Probability does not sum to 1!")
  }
  return(deck)
}
```

Here’s an example of the output of define_deck_odds().

```r
deck1 <- define_deck_odds(4, 10, 2, 2, 40)
print(deck1)
##     card       prob
##  1:    2 0.05000000
##  2:    3 0.08571429
##  3:    4 0.06428571
##  4:    5 0.08571429
##  5:    6 0.08571429
##  6:    7 0.08571429
##  7:    8 0.08571429
##  8:    9 0.08571429
##  9:   10 0.06428571
## 10:   11 0.08571429
## 11:   12 0.08571429
## 12:   13 0.08571429
## 13:   14 0.05000000
```

Now that we know the probability of drawing each card in the deck, we can pretty easily calculate the expected value of a given play by adding up the probabilities of the three outcomes (inside, outside, or a sting), each weighted by its payoff. This is done with the function calculate_EV().

```r
calculate_EV <- function(card1, card2, aces_left, twos_left, cards_left) {
  deck <- define_deck_odds(card1, card2, aces_left, twos_left, cards_left)
  min_card <- min(card1, card2)
  max_card <- max(card1, card2)

  # third card lands strictly between the two face-up cards
  prob_succeed <- sum(deck[card > min_card & card < max_card, prob])
  # third card lands outside the two face-up cards
  prob_fail <- sum(deck[card < min_card | card > max_card, prob])
  # third card matches one of the face-up cards (a sting)
  prob_stung <- sum(deck[card == min_card | card == max_card, prob])

  # win one bet, lose one bet, or lose double on a sting
  expected_value <- prob_succeed - prob_fail - prob_stung * 2
  return(expected_value)
}
```

This returns the expected value of a given hand, where any value > 0 means we’re expected to make money, and any value < 0 means we’re expected to lose money.

A few examples:

```r
calculate_EV(2, 10, 1, 1, 40)
# 2, 10, 1 Ace and 1 Two left, 40 cards left
## [1] 0.1459302

calculate_EV(3, 14, 1, 1, 40)
# 3, Ace, 1 Ace and 1 Two left, 40 cards left
## [1] 0.6761628

calculate_EV(5, 10, 2, 2, 30)
# 5, 10, 2 Aces and 2 Twos left, 30 cards left
## [1] -0.4634921

calculate_EV(3, 3, 1, 1, 30)
# a good sanity check - can't make money if the two cards are equal
## [1] -1.044444

calculate_EV(1, 14, 0, 2, 40)
# the highest possible EV - guaranteed to make money if no aces left
## [1] 1
```

So, we now have a way to calculate the expected value based on all of our knowledge. Let’s now go to the extreme, and calculate the EV for every possible state we could run into. Note that in the following graphs, the expected value should be interpreted as “the expected amount gained per dollar bet.” In other words, an EV of -0.5 implies that for every dollar you bet, you will lose 50 cents.
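One way to enumerate states like this is sketched below. It is not the code behind the charts: `ev_regular` is a simplified, hypothetical stand-in for calculate_EV() that only handles table cards from 3 through 13, the grid of deck sizes is an arbitrary choice, and "spread" is assumed to mean the difference between the two ranks.

```r
# Simplified stand-in for calculate_EV(): only valid when both table cards
# are ranks 3..13 (neither an Ace nor a Two) and the two cards differ.
ev_regular <- function(card1, card2, aces_left, twos_left, cards_left) {
  ranks <- 2:14
  p_0 <- (1 - (aces_left + twos_left) / cards_left) / 10.5
  prob <- c(twos_left / cards_left, rep(p_0, 11), aces_left / cards_left)
  prob[c(card1, card2) - 1] <- 0.75 * p_0   # table cards are less likely
  low  <- min(card1, card2)
  high <- max(card1, card2)
  inside  <- sum(prob[ranks > low & ranks < high])
  stung   <- sum(prob[ranks == low | ranks == high])
  outside <- sum(prob[ranks < low | ranks > high])
  inside - outside - 2 * stung
}

# Hypothetical grid of states; card1 < card2 keeps each pair of ranks once
states <- expand.grid(card1 = 3:13, card2 = 3:13,
                      aces_left = 0:4, twos_left = 0:4,
                      cards_left = seq(10, 50, by = 10))
states <- states[states$card1 < states$card2, ]
states$ev <- mapply(ev_regular, states$card1, states$card2,
                    states$aces_left, states$twos_left, states$cards_left)
states$spread <- states$card2 - states$card1   # assumed definition of spread

# Mean EV for each spread, averaged over every state in the grid
aggregate(ev ~ spread, data = states, FUN = mean)
```

Because the grid and the definition of spread are assumptions, the averages from this sketch need not match the charts exactly.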

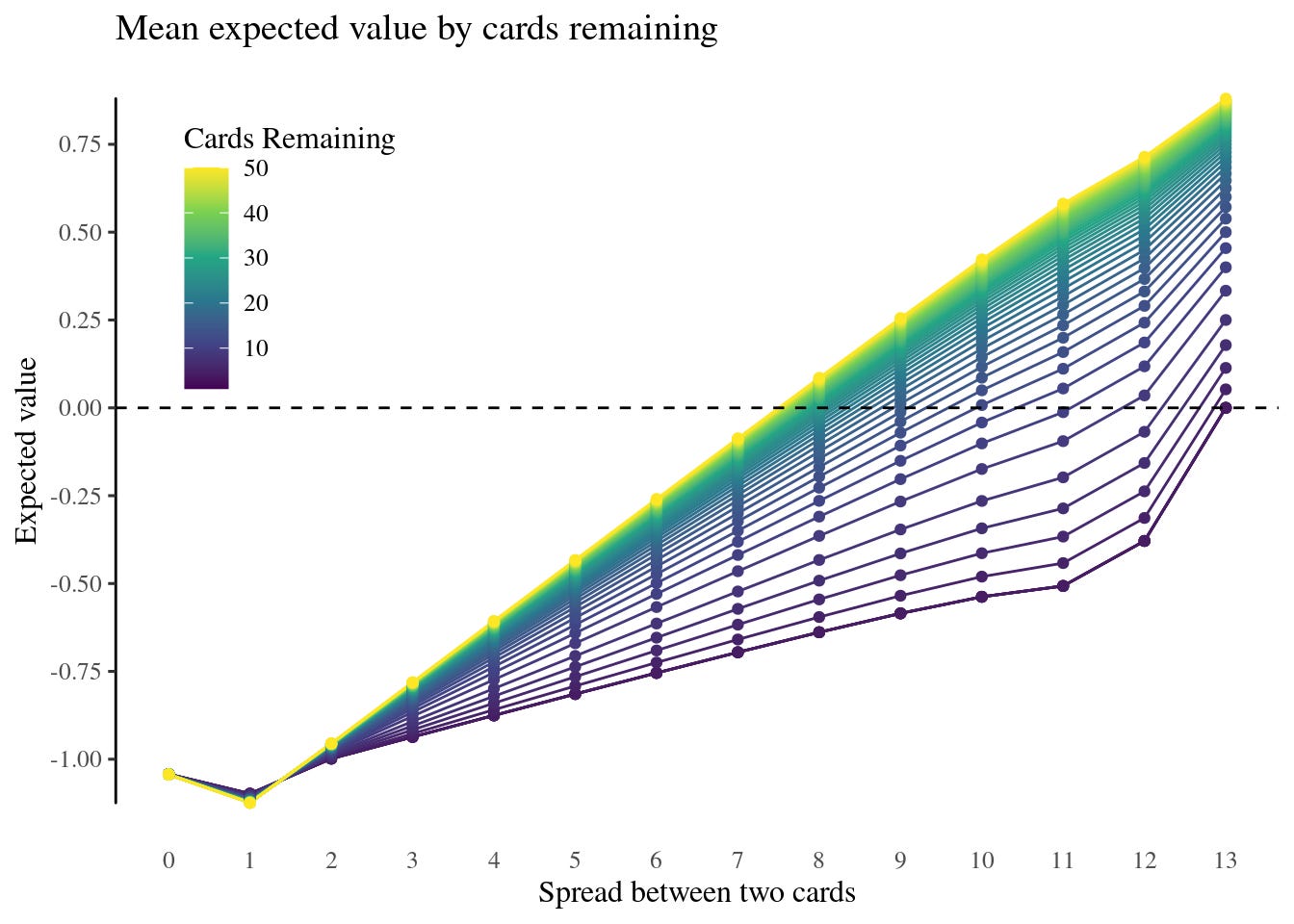

Let’s take a look at the mean expected value per cards remaining.

This is pretty surprising, at least to my intuition. The average expected value doesn’t even pop above 0 until you reach a spread of 8. This means that even a set of cards like 5-Queen or 6-King, which “feel” like quite a good bet to my intuition, are actually not very good in most circumstances.

So, if we’re at the betting table, what’s our strategy? One immediate takeaway is to never bet if the spread is beneath 8. There, the expected value is always negative, regardless of how many Aces, Twos, or cards are left in the deck.

Another takeaway is that there aren’t many good bets, so if you get a decent bet, you should bet big. (I’ll explore this idea more fully in a future post, incorporating the Kelly criterion for determining how much to bet on any given hand.) Just by looking at the number of dots above the 0-line compared to the total number of dots in the chart, you can see that you’re not likely to be dealt a winning hand very often. Remember that the game simply ends if the pot runs out, so in a sense you’re racing against the clock of other players, trying to get money out of the pot before everyone else. To that end, if you see a spread of 9 or more, and you know there aren’t many Aces left, you should bet as much as you can. Otherwise, you’ll watch as everyone else takes pieces out of the pot, and you end up with nothing.

Finally, you’ll often lose money, even on good bets. I remember getting an Ace, followed by an Ace, to which I bet everything in the pot. The next card was an ace, meaning I got stung and owed double my bet. This can happen! The important thing is to realize that the bet was still good, even if the outcome was bad. This, of course, is fundamental to every game involving money and chance, and something every poker player must learn.

At the end of all this, I confess that I have yet to really use these methods to make any sort of money. I did a version of this analysis a while back, and still lost money playing the game! It’s one thing to sit here typing out clever results, but it’s another to actually be surrounded by other people and make the correct bet at the correct time.

Of course, our gambling weekends aren’t really about the money; they’re about seeing family. So, even if I forget everything I’ve written here, bet on a 2-9 spread and lose it all, the weekend would still be worth it.